I chased a rare crash in highly threaded code. It popped up now and then; earlier fixes didn’t stick. I suspected an advanced concurrency issue. I also asked Gemini, Copilot/Codex, and Claude for help. They agreed with me: surely something subtle (epoll, re-queueing, ownership flags…).

We were all wrong—and, importantly, I was wrong in the same way the AIs were. Their analyses reinforced my initial hypothesis. The fact that the static analyzer reported nothing reinforced it even more—after all, that’s “proven non-AI tech.” In hindsight, if I had thought earlier about the limits of these tools (AI and non-AI), I might have changed direction sooner—but I was also primed by experience: in this part of the codebase, bugs are almost always complex.

Root cause (one sentence): a missing state update that let a loop continue after an object had been torn down. One line. Classic.
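To make the bug class concrete, here is a minimal, hypothetical C sketch. It is not the actual rsyslog code, which lives in the commit history. `session_t`, `session_open()`, `session_close()`, and `process_batch()` are invented names. A rare error branch tears the session down, and the state update right after the teardown is what makes the loop exit. Remove that one line and the loop keeps using freed memory.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical session object; not rsyslog's real data structure. */
typedef struct {
    int fd;
    int processed;
} session_t;

static session_t *session_open(int fd) {
    session_t *s = malloc(sizeof *s);
    if (s != NULL) {
        s->fd = fd;
        s->processed = 0;
    }
    return s;
}

/* Teardown: after this call, s is invalid and must not be used. */
static void session_close(session_t *s) {
    assert(s != NULL);
    free(s);
}

/* Takes ownership of s and tears it down exactly once.
 * A negative event simulates the rare error that forces teardown.
 * Returns the number of events handled while s was still valid. */
static int process_batch(session_t *s, const int *events, int n) {
    bool session_valid = true;          /* loop-exit state */
    int handled = 0;
    for (int i = 0; i < n && session_valid; i++) {
        if (events[i] < 0) {
            session_close(s);
            /* The one-line fix: record that s is gone so the loop exits.
             * Without it, later iterations would use the freed session,
             * and the final close below would free it a second time. */
            session_valid = false;
            continue;
        }
        s->processed++;
        handled++;
    }
    if (session_valid)
        session_close(s);               /* normal teardown path */
    return handled;
}
```

An explicit `break` after `session_close()` would be an equally valid fix; the flag version simply mirrors the “set the loop-exit state” style of fix. AddressSanitizer would report the buggy variant as a heap-use-after-free, but only if a test actually drives the error branch.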

This post focuses on where today’s AI helps and where it doesn’t—and how we’re shaping it so it actually becomes useful in an OSS project. Full technical detail is in the git history and on GitHub; I’m keeping the narrative focused rather than flooding you with internals.

Good news first

- The fix is small (set the loop-exit state after teardown) and is going through the usual review/CI.

- The complete code context lives in the commits; this article is about process and lessons, not every line.

How I actually found it

I discovered the bug during a focused review of a different object—an area no AI had flagged but that I wanted to re-check. That change of viewpoint made the missing state update obvious, even though it wasn’t what I was hunting for. It’s a good reminder that shifting focus often breaks bias loops.

Only after I found and fixed the bug did we put broader measures in place: an analysis of why we missed it and concrete changes to prevent this class of bug from slipping through again. The solution went in first; that triggered the deeper work.

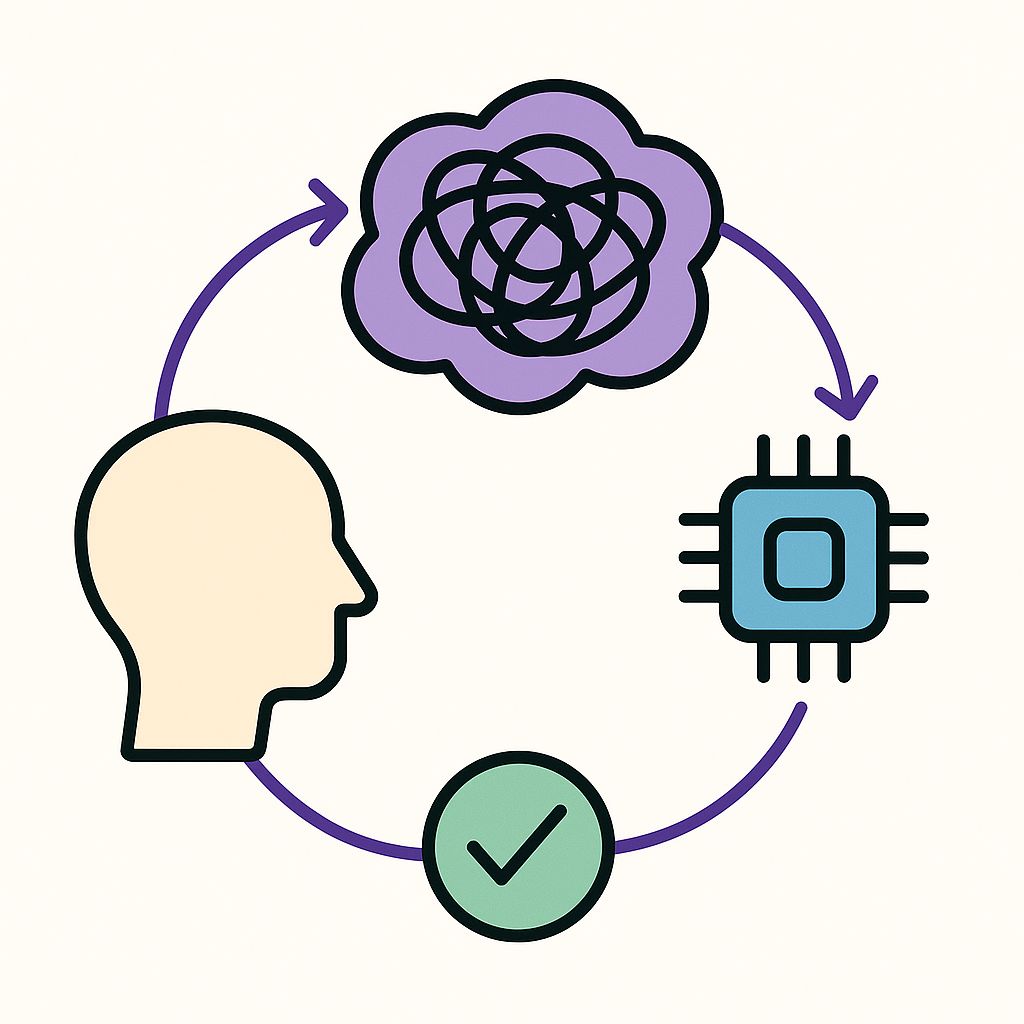

The bias loop: human ↔ AI ↔ tools

- I started complex. In an event/worker architecture, “clever race” is a tempting first guess.

- AIs started complex, too. Multiple models converged on advanced scenarios, which felt right to me.

- Static analyzer silence added weight. The analyzer did not flag the path, and we don’t know why in this case. Because it’s a proven, non-AI tool, its silence further reinforced my thinking.

- Reinforcement happened. Seeing convergence (AIs + analyzer silence) increased my confidence—without a concrete failing path. To be fair, that’s not unusual for issues that surface only after days of very high-volume throughput; I wrote about such long-tail races years ago.

- Lesson learned: keep tool limits in mind up front—but also recognize that prior experience primes you. I probably would have questioned the complex hypothesis earlier if I’d framed the task as “prove every invalidation path either exits the loop or safely replaces state,” yet I also know why my first instinct was complexity.

Also worth noting: even with clear hint comments like “don’t use after close,” general LLMs only flagged the problem when I explicitly pointed them to the exact variable and code snippet.

A better way: constraints and structure

To make AI helpful, we gave it a job it could do deterministically.

Our custom “rsyslog code review assistant”

I built a strict, single-file reviewer (a custom GPT) with hard rules:

- State-machine first: enumerate all loop exits and post-teardown behavior. If a path can continue after invalidation → defect.

- Threaded reasoning second: only after single-thread invariants are clean.

- Evidence required: output is a compact table plus a minimal counterexample path.

Results so far

- It did flag the missing loop-exit update in this case.

- It’s good at nit-picking, which is exactly what you want in systems code.

- It also produces false positives—expected, since it operates at source-file scope and we keep runtime tight.

- It’s slower than a linter, so we’ll use it as an additional line of defense for hot paths and PRs touching lifetimes.

- There’s an experimental patch-review mode. Early days, but it might be a useful antidote to “AI slop”, meaning AI used without a clear plan or awareness of its limits.

We’ll keep evolving and using this assistant in rsyslog. It already detects this bug class; we’re curious how it performs across more cases.

What will change in rsyslog (beyond this one fix)

Tools are half the story. The other half is making the code easier for humans and machines to reason about.

- Contracts in code: add brief pre/post comments with specific names (“after close, the session/descriptor are invalid; either exit the loop or replace them before any further use”). Clear contracts help reviewers and AIs.

- Fewer control-flow macros: prefer explicit exits so they’re visible and grep-able.

- Clearer naming & consistent idioms: align function names with behavior (close/free/destroy/deinit) and adopt a standard post-teardown pattern (either exit or replace state immediately).

- Deterministic tests for rare paths: small harnesses that force uncommon error branches so sanitizers and analyzers hit them every run.

- Semantic static checks: we’re evaluating repository-specific rules (e.g., clang-tidy/Coccinelle) to flag “invalidate-in-loop without an immediate exit or safe replacement.” We don’t run clang-tidy today; we’re assessing which option gives the best signal-to-noise ratio for our codebase.
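The contract, explicit-exit, and post-teardown items above might combine roughly like this. Again, this is a hypothetical C sketch: `conn_t`, `conn_open()`, `conn_close()`, and `send_all()` are invented for illustration, and the contract comment is adapted from the wording quoted above. After `conn_close()`, the loop either returns explicitly or replaces `c` before its next use, and no control flow is hidden behind a macro.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical connection object, for illustration only. */
typedef struct {
    int id;
    int sent;
} conn_t;

static conn_t *conn_open(int id) {
    conn_t *c = malloc(sizeof *c);
    if (c != NULL) {
        c->id = id;
        c->sent = 0;
    }
    return c;
}

/* Contract:
 *   pre:  c is a valid, open connection.
 *   post: c is invalid. The caller must either exit the loop or replace c
 *         before any further use. */
static void conn_close(conn_t *c) {
    assert(c != NULL);
    free(c);
}

/* Sends n messages; a negative message simulates a broken connection.
 * Returns the number of reconnects, or -1 on failure. */
static int send_all(const int *msgs, int n, int max_reconnects) {
    int reconnects = 0;
    conn_t *c = conn_open(0);
    if (c == NULL)
        return -1;
    for (int i = 0; i < n; i++) {
        if (msgs[i] >= 0) {
            c->sent++;                  /* c is known to be valid here */
            continue;
        }
        conn_close(c);                  /* c is now invalid (see contract) */
        if (reconnects == max_reconnects)
            return -1;                  /* explicit exit: visible and grep-able */
        c = conn_open(++reconnects);    /* safe replacement before any reuse */
        if (c == NULL)
            return -1;
    }
    conn_close(c);
    return reconnects;
}
```

The design choice is that every `conn_close()` is followed immediately by one of exactly two outcomes, so a reviewer (human or AI) only has to check the next two lines rather than trace the rest of the loop.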

What off-the-shelf AIs did (and didn’t)

Did well

- Collected plausible hypotheses fast (stale events, re-queue while owned, flag races).

- Produced reasonable “areas to audit” lists.

Didn’t

- Didn’t prove a failing execution path.

- Didn’t enforce the boring invariant: if you invalidate state inside a loop, don’t keep looping with it.

- Even with hint comments, only caught it when pointed directly at the variable and code snippet.

Static analyzer & sanitizers

- Static analyzer: did not report the path here. We don’t know exactly why in this instance; it usually handles path analysis well. Its silence, combined with AI agreement, fed the bias loop.

- Sanitizers: we already run them in CI. They are excellent when a test hits the path; rare paths don’t magically execute. Hence the need for deterministic tests.
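A deterministic test for a rare path can be as small as a fault-injection counter. The sketch below assumes a hypothetical test-only hook (`fail_at_call`, `io_step()`); `worker()` and `run_fault_sweep()` are likewise invented names, not rsyslog APIs. The harness forces a failure at every call position, so the error branch runs on every CI run instead of once every few days in production.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical test-only fault-injection hook: when fail_at_call > 0,
 * the fail_at_call-th call to io_step() fails. */
static int fail_at_call = 0;
static int call_count = 0;

static bool io_step(void) {
    call_count++;
    return !(fail_at_call > 0 && call_count == fail_at_call);
}

/* Code under test: a loop whose error branch tears down state. */
static int worker(int steps) {
    int *progress = malloc(sizeof *progress);
    if (progress == NULL)
        return -1;
    *progress = 0;
    for (int i = 0; i < steps; i++) {
        if (!io_step()) {
            free(progress);
            return -1;                  /* exit immediately after teardown */
        }
        (*progress)++;
    }
    int done = *progress;
    free(progress);
    return done;
}

/* Harness: inject a failure at every call position, so the rare error
 * branch executes on every run (ideally in a sanitizer-enabled build). */
static int run_fault_sweep(int steps) {
    int error_paths = 0;
    for (int n = 1; n <= steps; n++) {
        fail_at_call = n;
        call_count = 0;
        int rc = worker(steps);
        assert(rc == -1);               /* each injected fault must be handled */
        if (rc == -1)
            error_paths++;
    }
    fail_at_call = 0;                   /* restore normal behavior */
    call_count = 0;
    return error_paths;
}
```

Built with `-fsanitize=address,undefined` (supported by both GCC and Clang), a use-after-free on that error branch becomes a reliable test failure rather than a rare production crash.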

Practical guidance (kept intentionally generic)

- Think simple first. If a “complex-sounding” failure lacks a concrete path, double-check the easy invariants – loop exits, teardown contracts, symmetric error handling.

- Use AI with a plan. Don’t ask “what’s wrong?” Ask for a proof obligation: “list where state is invalidated and show me which paths don’t exit or replace it, with a minimal counterexample.”

- Write clean, explicit code. Clear contracts, explicit exits, and consistent naming help humans and AIs.

- Invest in CI where it matters. Add small harnesses that force rare branches so existing tools actually exercise them; evaluate one or two repo-specific static rules for recurring patterns.

A note to AI skeptics in OSS

Skepticism is healthy. Done wrong, AI adds noise. Done right—with constraints, contracts, and clear goals—it can catch real issues and save time.

The core risk here isn’t unique to AI; it’s bias. Human reviewers chase likely complexities. AIs mirror that unless we force them to think differently. Future systems will need to reason both like humans and in orthogonal ways. That may require more resources, which is a topic for another day. I’m optimistic we’ll overcome these bias problems, and in the meantime, we can design around them.

This episode was a useful education in a specific AI limit – and it already produced concrete improvements: clearer contracts and naming, explicit exits, deterministic tests, and a specialized reviewer that flags what we care about. Those measures came only after I found the bug; finding it was the trigger to build a more robust, AI-assisted review workflow so that the next one is caught earlier.

Closing

This wasn’t a grand data race; it was a small missing state update with outsized consequences. General-purpose AIs didn’t catch it, and the static analyzer’s silence reinforced my bias. A focused human review—of a different area—did.

The lesson for me: be explicit about tool limits before you start, and design the check accordingly. Combine human focus with machine structure. Clear contracts, explicit exits, targeted CI, a light semantic check or two, and a purpose-built review assistant form a pragmatic path forward. That’s what we’re rolling out in rsyslog—and we’ll report back on how it performs on the next “complex” bug that isn’t.

Note: I wrote this article with AI support, but in an interactive session that ended only when I was fully satisfied. I applied some manual edits for fine-tuning afterwards. This took time, but the result is more readable (I am not a native English speaker), and for such an in-depth article it still saved some time.

This approach to AI is part of the rsyslog project’s approach to responsible “AI First”. Most importantly, we need to understand the technology’s possibilities and limits as precisely as possible and always stay aware of them. I hope this article helps others improve their own insight.