I just wanted to share the good news that Andre, LogAnalyzer’s development lead, today finished implementing a logstream driver for MongoDB. So this nice tool can now also be used to access MongoDB-based data. Andre’s lab data was created by rsyslog’s ommongodb output module (you currently need the rsyslog git master branch to make this work). The logstream driver is not yet really optimized and we do not make full use of the NoSQL capabilities (like different schemas inside a single collection and all this). However, there is lots of exciting stuff on the todo list and I thought I would mention a first successful step – and probably a quite important one if you “just want to use that thing” ;) So: good news for a Friday!

next steps for ommongodb

I just wanted to give you a heads-up on my work on ommongodb. During the past couple of days I have converted it to libmongo-client, which gives us a much more solid basis. I have also refactored it to some degree and adapted it to the new v6 config interface. Also, ommongodb will not be supported on pre-v6 platforms. This enables me to use the v6-exclusive features I am building now, especially great JSON and CEE support. Right now, ommongodb uses a very limited field set, and this set is hardcoded (so you can change it, but that means you need to change code).

My next step is to make ommongodb support the base event (as currently being discussed in project lumberjack). I will also provide a capability to add “extra” information from the cee field set. That’s probably not a perfect solution, but the goal is to get ready for some command line tools that are able to extract data from mongodb and thus make the system mimic a traditional flat-file syslog format. I have also asked Andre, the lead behind Adiscon LogAnalyzer, to consider adding support for MongoDB to LogAnalyzer. I have not yet heard back from him and don’t know exactly about his schedule, but I hope we will be able to make this happen very soon.
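
To illustrate the "mimic a flat-file format" idea, here is a minimal sketch of how such a command line tool might render one stored event as a traditional syslog line. The field names (`time`, `host`, `tag`, `msg`) are assumptions made for this example only; they are not the field set that ommongodb actually writes, and no database access is shown.

```python
from datetime import datetime

# English month abbreviations, independent of the process locale.
MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def to_flat_line(doc: dict) -> str:
    """Render one event document as 'Mmm dd hh:mm:ss HOST TAG MSG'.

    The keys used here are hypothetical; adjust them to the real schema.
    """
    t = doc["time"]
    # Traditional syslog timestamps pad the day with a space, not a zero.
    stamp = f"{MONTHS[t.month - 1]} {t.day:2d} {t:%H:%M:%S}"
    return f'{stamp} {doc["host"]} {doc["tag"]} {doc["msg"]}'


event = {
    "time": datetime(2012, 3, 9, 14, 5, 7),
    "host": "web01",
    "tag": "sshd[4721]:",
    "msg": "Accepted publickey for alice",
}
line = to_flat_line(event)
print(line)
```

A real tool would iterate over a query result instead of a single dictionary, but the formatting step would look much the same.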

Only after that – somewhat hardcoded – work is done I’ll go back and look at JSON and templates in a more native way (very probably also looking at the contributed JSON string generator in more depth).

How to display XML data in Adiscon LogAnalyzer?

Log files usually do not contain XML data. However, this does not mean logs are necessarily non-XML. A prominent example is IHE, which transports XML documents inside syslog messages. My post on Adiscon LogAnalyzer 3.3 drew some interesting comments from John Moerke, who sees use for it in an IHE environment.

I have now discussed with Andre how to integrate such functionality inside the log analyzer. There are obviously a couple of questions to address, but a core question is how to deal with the hierarchic structure that XML offers. Traditionally, log files contain flat name-value pairs, so they can easily be mapped into a two-dimensional array (which is what you see when you look at Adiscon LogAnalyzer). The application is built around this concept. So a fundamental question is how to make sense out of an XML stream. An obvious answer is that we may display some fields in a flat overview, but display the full structure in detail view. This makes sense, but there are ample complexities in things like queries. Plus, it would probably require big changes to the engine.
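
One way to picture the flat overview is to flatten the XML tree into dotted name-value pairs. The following is only a sketch of that idea in Python, not how Adiscon LogAnalyzer works or will work; the sample document and the naming scheme (`parent.child` for elements, `element@attr` for attributes) are assumptions for illustration.

```python
import xml.etree.ElementTree as ET


def flatten(elem, prefix=""):
    """Map an XML element tree to a flat dict of name-value pairs."""
    path = f"{prefix}.{elem.tag}" if prefix else elem.tag
    pairs = {}
    # Attributes become 'path@name' entries.
    for name, value in elem.attrib.items():
        pairs[f"{path}@{name}"] = value
    # Non-empty element text becomes the value of the path itself.
    text = (elem.text or "").strip()
    if text:
        pairs[path] = text
    # Recurse into children; repeated sibling tags overwrite each other here.
    for child in elem:
        pairs.update(flatten(child, path))
    return pairs


doc = ET.fromstring(
    '<audit><event id="110114"><user>alice</user>'
    '<outcome>success</outcome></event></audit>'
)
flat = flatten(doc)
for name, value in flat.items():
    print(name, "=", value)
```

The comment about repeated siblings shows exactly where the flat model starts to hurt: a list of same-named elements has no natural place in a two-dimensional array, which is part of why queries get complicated.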

Putting implementation effort aside for the moment, the big question is how users (you!) would like to work with XML data in a tool like Adiscon LogAnalyzer. Feedback is most appreciated!

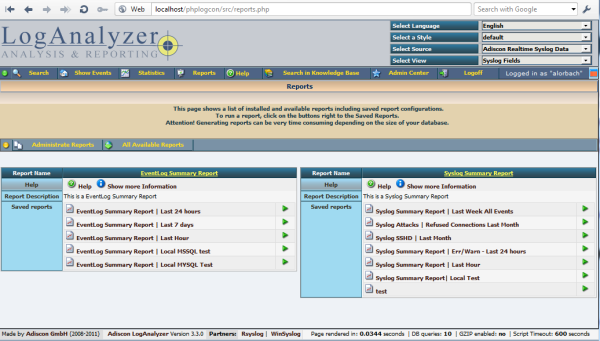

Some sample Adiscon LogAnalyzer Reports…

I thought I would give you a glimpse of which reports Adiscon LogAnalyzer can generate. There are some interesting summary reports, like the Windows Event Log Summary Report and the Syslog Summary Report. Of course, you can customize these reports based on the usual filtering capabilities. As an example, have a look at the syslog summary report just for “today”. You can play with these options live at the Adiscon LogAnalyzer demo site.

Please note that we will be working on more reports in the months to come. Also, if you miss some report, you may consider sponsoring its development. This can be quite cost-effective compared to the many quite expensive solutions you otherwise need to use — or your programming time ;-)

Adiscon LogAnalyzer 3.3.0 beta is out

Adiscon’s open source log analysis frontend LogAnalyzer has grown with some exciting new features. Most importantly, report generation speed has been much increased. This was made possible via tighter integration of the report logic with the actual log source (database or file). As a result, all reports are generated in considerably less time and require far fewer system resources to complete. Along the same lines, Adiscon LogAnalyzer now offers suggestions for indexing database sources. If it finds room for improvement, new indexes are automatically suggested. This results in overall improved speed throughout the application.

Also, finally a long-due user interface improvement has been made: to access the reporting feature, users needed to access the admin panel. This was kind of well-hidden and cumbersome. In 3.3.0, reports are directly available from Adiscon LogAnalyzer’s main panel. With this change, some users may even discover the reporting feature for the first time. The screenshot below gives you a sneak preview of the new interface.

Best of all, the new version has brought some under-the-hood improvements that we will utilize in the future to generate some really exciting new reports. Stay tuned, there is much more to come…

And finally let me say that I work with the LogAnalyzer team to improve integration into rsyslog and Adiscon’s Windows logging components. We are trying very hard to provide an easy to use, integrated solution.

syslog normalization

I have been working on syslog normalization for quite some years now. A couple of days ago, David Lang talked to me about syslog-ng’s patterndb, an approach to classify log messages and extract properties from them.

I have looked at this approach, and it indeed is promising. One ingredient, though, is missing, that is a directory of standard properties (like bytes sent and received in traffic logs). I know this missing ingredient very well, because we also forgot it until recently.

The aim to normalize log data is far from being new. Actually, I think it is one of the main concerns in log analysis. Probably one of the first folks who thought seriously about it was Marcus Ranum, who coined the concept of “artificial ignorance”, meaning that we can remove those messages from a big pile of logs that we know to be uninteresting. But in order to do that correctly, you need to know how exactly they look. And this is where log normalization comes in. I wrote an in-depth paper in 2004, titled “On the nature of syslog data”. The version officially published claims “work in progress”, but it still has all the juicy details.
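
The “artificial ignorance” idea described above can be sketched in a few lines of Python: keep a list of patterns for messages known to be uninteresting and show only what is left. The patterns and sample messages are made up for this example.

```python
import re

# Patterns for messages we already know and do not care about (illustrative).
KNOWN_BORING = [
    re.compile(r"session (opened|closed) for user \w+"),
    re.compile(r"CRON\[\d+\]: "),
]


def interesting(lines, patterns=KNOWN_BORING):
    """Return only the lines that match none of the known-boring patterns."""
    return [ln for ln in lines if not any(p.search(ln) for p in patterns)]


sample = [
    "sshd[811]: pam_unix(sshd:session): session opened for user bob",
    "CRON[1532]: (root) CMD (run-parts /etc/cron.hourly)",
    "kernel: EXT4-fs error (device sda1): bad block bitmap",
]
remaining = interesting(sample)
print(remaining)
```

The quality of the result depends entirely on how precisely the patterns describe the known messages, which is the point made above: you need to know exactly how they look.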

Internally, we implemented this approach in our MonitorWare products a little bit later. For example, it is used inside the “Post Process Action” in WinSyslog (Michael also wrote a nice article on how to parse log messages with this action). While this was a great addition (and is used with great success), I failed to get enough community momentum to build a larger database of log messages that could be used as a basis for large scale log normalization. One such – largely failed for syslog – approach is the event knowledge base.

However, I did not give up on the general idea and proposed it wherever appropriate. The last outcome of this approach is the soon-to-be-released Adiscon LogAnalyzer v3, which uses so-called message parsers to obtain useful information from log entries. Here, I hope we will be able to gain more community involvement. We already got two message parsers contributed. Granted, that’s not much, but the ability to have them is so far little known. With the release of v3, I hope we get more and more momentum.

The syslog-ng patterndb approach brings an interesting idea to this space: as far as I have heard (I generally do NOT look at competing code to prevent polluting my code with things that I should not use), they use radix trees to parse the log messages. That is a clever approach, as it allows much quicker matching of a message against a large number of parse templates. This makes the approach suitable for real-time normalization of an incoming stream of syslog data.
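
To show why a tree helps, here is a small sketch of the general idea. It is not syslog-ng's implementation (which I have not looked at), and it is a plain token-level prefix tree rather than a compressed radix tree. The pattern syntax (`<name>` for a field that matches one whitespace-delimited token) and the rule names are invented for this example.

```python
class Node:
    def __init__(self):
        self.literal = {}     # literal token -> child Node
        self.wildcard = None  # (field name, child Node) or None
        self.rule = None      # rule name if a pattern ends here


class PatternTree:
    def __init__(self):
        self.root = Node()

    def add(self, rule, pattern):
        """Insert a pattern; tokens written as <name> capture one token."""
        node = self.root
        for tok in pattern.split():
            if tok.startswith("<") and tok.endswith(">"):
                # Patterns sharing a prefix share the wildcard edge;
                # the field name of the first pattern added wins.
                if node.wildcard is None:
                    node.wildcard = (tok[1:-1], Node())
                node = node.wildcard[1]
            else:
                node = node.literal.setdefault(tok, Node())
        node.rule = rule

    def match(self, message):
        """Return (rule, fields) for the first matching pattern, else None."""
        return self._walk(self.root, message.split(), 0, {})

    def _walk(self, node, tokens, i, fields):
        if i == len(tokens):
            return (node.rule, fields) if node.rule else None
        tok = tokens[i]
        # Prefer an exact literal match, fall back to the wildcard edge.
        child = node.literal.get(tok)
        if child is not None:
            hit = self._walk(child, tokens, i + 1, fields)
            if hit:
                return hit
        if node.wildcard is not None:
            name, child = node.wildcard
            return self._walk(child, tokens, i + 1, {**fields, name: tok})
        return None


tree = PatternTree()
tree.add("ssh_login", "Accepted password for <user> from <src_ip> port <src_port> ssh2")
tree.add("ssh_fail", "Failed password for <user> from <src_ip> port <src_port> ssh2")
result = tree.match("Failed password for root from 10.0.0.5 port 22 ssh2")
print(result)
```

The lookup walks the message token by token, so in the common case its cost grows with the length of the message rather than with the number of patterns loaded. A list of regular expressions, by contrast, has to be tried one after another.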

Adiscon LogAnalyzer, by contrast, uses a regex-based approach, but that is primarily for simplicity, in an effort to invite more contributions (WinSyslog has a far more sophisticated approach). In Adiscon LogAnalyzer we began to become serious about identifying what a property actually means. While we have a fixed set of properties, with fixed semantics, in WinSyslog, MonitorWare Agent and rsyslog, this set is rather limited. The Windows product line supports ease of extension of the properties, but does not provide standard IDs for those properties.

In Adiscon LogAnalyzer, we have fixed IDs for a larger set of properties, now about 50 or so. Still, that set is very small. But we created it with the intention to be able to map various “semantic objects” from different log entries to a single identity. For example, most firewall logs will contain a source and destination IP address, but almost all firewalls will use different log message formats to do that. So we need to have different analyzers to support these native formats, for example in reports. In Adiscon LogAnalyzer, we can now have a message parser “normalize” these syslog entries and map the vendor-specific format to the generic “semantic object”. Thus the upper layers (like views and reports) then work on these normalized semantic objects and do not need to be adapted to each firewall. This needs only be done at the parser level.
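
The mapping from vendor-specific formats to a common property can be sketched as follows. This is Python for illustration only, not Adiscon LogAnalyzer's actual parser code; the two message formats, the parser names and the property names `src_ip` and `dst_ip` are assumptions, not the real property IDs.

```python
import re

# One regex per (hypothetical) vendor format; the named groups are the
# shared "semantic objects" that the upper layers rely on.
PARSERS = {
    "vendor_a": re.compile(r"SRC=(?P<src_ip>\S+) DST=(?P<dst_ip>\S+)"),
    "vendor_b": re.compile(r"from (?P<src_ip>[\d.]+) to (?P<dst_ip>[\d.]+)"),
}


def normalize(msg):
    """Return normalized properties from the first parser that matches."""
    for name, rx in PARSERS.items():
        m = rx.search(msg)
        if m:
            return {"parser": name, **m.groupdict()}
    return None


a = normalize("IN=eth0 SRC=192.0.2.1 DST=198.51.100.7 PROTO=TCP")
b = normalize("Deny tcp from 192.0.2.1 to 198.51.100.7")
print(a)
print(b)
```

Both messages end up with the same `src_ip` and `dst_ip` keys, so a report written against those keys works for either firewall without knowing which parser produced them.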

Such a directory of semantic objects would be very useful in my humble opinion. We are currently working on making it publicly available, all this in the hope that a community will get involved ;) If we manage to get a large enough number of log and/or parser contributions, we may potentially be able to make Adiscon LogAnalyzer an even better free tool for system administrators.

And as there is hope that this will finally succeed, I have begun to think about a potential implementation inside rsyslog. It doesn’t sound very hard, but still requires careful thinking. One thing I would like to see is a unified approach that covers at least rsyslog and Adiscon LogAnalyzer, and hopefully the Windows tools as well.

Another very good thing is that there already is a standard for providing standard semantical objects: during the IETF syslog standardization effort, I pressed hard for so-called structured data elements. I managed to get them into the final RFC. These structured data elements are now the key for conveying the log information once it is normalized: the corresponding name-value pairs can easily be encoded with it.

I hope we will finally be able to succeed on this road, because I think this would be of tremendous benefit for the syslog community.

phpLogCon becomes Adiscon LogAnalyzer

I have blogged over the past days about Adiscon LogAnalyzer. We are now gradually rolling out the new site. So I thought it is a good idea to reproduce my “official announcement” on the blog as well:

As in all things, there is a certain fashion in open source project names as well. For a long time, “php*” was a great name for php-based open source solutions. However, nowadays these somewhat bulky names have been replaced by “more streamlined” names.

I personally think that dropping the “php” part makes it somewhat easier to speak and write about these projects. So we decided it was right to drop “php” from “phpLogCon”. But was “LogCon” the ultimate name for a tool to search, analyze and (starting with v3) report on network event logs? A quick discussion within our group as well as with some external buddies turned out that “LogCon” is probably pretty meaningless. Even if one deciphers “Con” for “Console” – what does it mean to be a “Console” in this context? Not an easy to answer question. Bottom line: “LogCon” is pretty meaningless.

So we thought we would do “the right thing” and rename the project before it becomes even more widely spread. The later you do a name change, the more painful it is. That made us think about good names. We ended up with “LogAnalyzer”, because analysis is the dominant use case for this tool (especially if you think of reports as being part of the analysis ;) ). Another quick search made us aware that there are (of course) lots of “LogAnalyzers”. And, of course as well, all second level domains were taken.

Lacking an expensive legal adviser, we made the decision to boldly name the project “Adiscon LogAnalyzer”, aka “the log analyzer (primarily) written by Adiscon”. With that approach we use our company name (which obviously legally belongs to us) together with the generic term “LogAnalyzer”. That is done in the hope that it will resolve any legal friction that otherwise may occur. For the very same reason you will see us consistently referring to “Adiscon LogAnalyzer”.

We are aware, however, that this implies some other cost: A project with a company name inside it does sound a bit like a purely commercial project. On the other hand, that seems to be no problem with the big players, like “Red Hat Linux” or “SuSe Linux”. So we hope that the company part inside the name will not have a too-bad effect on this project.

We pledge that Adiscon LogAnalyzer will always be a free, open source project. And the GPLv3 we use is your guarantee for that.

In addition to the core Adiscon LogAnalyzer, Adiscon will also provide some non-GPLed components in the future. And we hope that others will do that as well. Our sincere hope is that Adiscon LogAnalyzer will evolve to a framework where many third parties can plug in specific functionality. Consequently, we have added a plugin directory to the new site, and some third-party written message parsers already populate it.

So – phpLogCon has not only a new name and a new site, it is also more active than ever and eager to solve the log analysis and reporting needs for a growing community. Please help spread the word!

Why is writing good user doc such a problem…?

… for me, I should add. Today, I ran across a post on the rsyslog mailing list where a user (rightfully!) complains that rsyslog documentation is confusing.

I really don’t like the idea that users are having a hard time because they can not get pretty basic things done. Unfortunately, there are a number of reasons for this: one, of course, is lack of time. I am rather busy developing new functionality and besides rsyslog I have also other chores today at Adiscon, like helping with the next and really great release of Adiscon LogAnalyzer, a free and open source solution for searching, analyzing and reporting on network event data and syslog (yeah, and creating buzzwords, of course…). But there is a more subtle issue:

I have been doing logging and syslog for over 10 years now (close to 15, if I remember correctly). I have seen so much in the logging world that I can hardly think of the time when I did not know what PRI or TAG or even MSG was, what the (dis)advantages of simplex vs. duplex comm modes are, and what makes 3195 better (or worse) than 3164 or 5424 ;)

In short: it is pretty hard for me to go back to the roots and envision what somebody new to syslog needs to know AND in what order! I am trying my best, but writing basic-level articles (and documentation) requires considerable effort. A good article, well-thought out (like a 4-page journal article) can easily take 4 to 5 days to create. Even then, I need help from other folks when I need to write for entry level folks (and there is nothing bad with being entry-level: everybody is at some point in time). Here, of course, the time resource problem hits again: I usually can not afford this effort “just” to create doc.

With the rsyslog cookbook I started another approach: there I focus on very specific environments. I don’t really like this idea, because it does not tell people what exactly they are doing. But still the past weeks have proven this to be a useful approach. But I also notice that the cookbook is only useful if the configuration matches exactly what the user wants – otherwise users are lost. I guess that’s due to not really understanding what happens. The good thing about the cookbook is that it requires relatively little effort. Most samples were created within an hour, which seems to be acceptable for something that can be reused.

The ultimate solution would be that users write content themselves. The rsyslog knowledge base (or forum, as you may call it) is most successful in this regard. But it is hard to navigate and hard to find a solution – you often need to wade through various posts before you get to the (often simple) solution. The rsyslog mailing list is another excellent resource, especially as other folks actively help with supporting rsyslog. This is very important for me and the project, and I appreciate it very much. Unfortunately, the con again is that the mailing list makes it hard for new users to find already existing solutions (that it is being mirrored to various aggregators helps a bit, but only so much…).

The ultimate solution, I thought, was the rsyslog wiki and we see some very nice articles inside it. Unfortunately, very few users contribute to the wiki. Just think what an enormous knowledge reservoir this could be if only every 5th user who got help would take a few minutes of his time to craft a quick wiki article describing what he does, why, and how it works. Unfortunately, most users seem to not have this time. I can understand that, I guess they have pressing schedules as well. And these schedules may already be stressed by the extra time they needed to find the solution for an obviously simple thing…

So this is not a good situation, but I can currently not do much more than to keep working on the cookbook and ask everyone to contribute documentation. For the long-term success, I think it is vital for rsyslog to make its power available to all users. Good doc is one necessity, a better config format another one (but I won’t elaborate about this in today’s post again ;)).

is a third-level domain suspect to google?

If you follow this blog, you’ve probably already heard that we are doing a name change for phpLogCon: it will soon be known under the name Adiscon LogAnalyzer (with the Adiscon in front of the “real” name to ease potential legal issues).

Among others, that means we need to change the web site. Not surprisingly, no second-level domain with loganalyzer in it was available at the time we searched. Most of them, of course, have been taken by domain spammers. So we settled for the loganalyzer.adiscon.com name. As I found out yesterday in Google Webmaster Tools, this may cause some trouble. Google provides a “Change of Address” tool that is meant to be used in this situation.

However, I discovered that this tool does not work with third-level domains. All I see when I try to use that tool is the message “Setting is restricted to root level domains, only” (as a slight technical side-note, it should say “second level domain” as I don’t think it works for com, net, org, … only ;)).

While browsing the google help forum, I found that others seem to have similar problems. For example, people in the UK, where everything is a third-level domain (for example, .co.uk is what .com is for the international Internet).

Given this stance, I wonder if google punishes third-level domain sites in any other way. If so, our decision to move to the new site may not be a good one. I have posted a question in the Google help forums. I guess I will not get a definite response, but maybe one can read between the lines.

I will keep you posted, also on the overall progress of the name/site switch. We have now entered the “hot phase”, meaning that we actually intend to roll over to the new site within the next couple of days. Stay tuned for more news and more features.

syslog data modeling capabilities

As part of the IETF discussions on a common logging format for sip, I explained some syslog concepts to the sip-clf working group.

Traditionally, syslog messages contain free-form text, only – aimed at human observers. Of course, today most of the logging information is automatically being processed and the free-form text creates ample problems in that regard.

The recent syslog RFC series has gone to great lengths to improve the situation. Most importantly, it introduced a concept called “Structured Data”, which makes it possible to express information in a well-structured way. Actually, it provides a dual layer approach, with a coarse designator at the upper layer and name/value pairs at the lower layer.
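
As a small sketch of that dual layer, the function below builds one RFC 5424 structured data element: the SD-ID is the coarse designator, and the parameters are the name/value pairs. The SD-ID and parameter names follow the example used in RFC 5424 itself (32473 is an enterprise number reserved for documentation); the function name is my own, and name validation is left out.

```python
def sd_element(sd_id, params):
    """Format one SD-ELEMENT: [SD-ID name="value" ...].

    Inside a value, the characters '"', '\\' and ']' must be escaped
    with a backslash. Names are not validated in this sketch.
    """
    def esc(value):
        # Escape the backslash first so later escapes are not doubled.
        return (value.replace("\\", "\\\\")
                     .replace('"', '\\"')
                     .replace("]", "\\]"))

    body = "".join(f' {name}="{esc(str(value))}"' for name, value in params.items())
    return f"[{sd_id}{body}]"


element = sd_element("exampleSDID@32473", {"iut": "3", "eventSource": "Application"})
print(element)
```

The element is placed between the header and the free-form message text, so a receiver can pick out the name/value pairs without having to parse the human-readable part.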

However, the syslog RFCs do NOT provide any data/information modeling capabilities that come with these structured data elements. Their syntax and semantics are to be defined in separate RFCs. So far, only a few examples exist. One of them is the base RFC5424, which describes some common properties that can be contained in any syslog message. Other than that, there are RFC5674, which describes a mapping to the Alarm MIB and ITU perceived severities, and RFC5675, which describes a mapping to SNMP traps. All of them are rather small. The IHE community, to the best of my knowledge, is currently considering using syslog structured data as an information container, but has not yet reached any conclusion.

Clearly, it would be of advantage to have more advanced data modeling capabilities inside the syslog base RFCs, at least some basic syntax definitions. So why is that not present?

One needs to remember that the syslog standardization effort was a very hard one. There were many different views, “thanks” to the broad variety of legacy syslog, and it was extremely hard to reach consensus (thus it took some years to complete the work…). Next, one needs to remember that there is such an immense variety in message content and objects, that it is a much larger effort to try to define some generic syntaxes and semantics (I don’t say it can not be done, but it is far from being easy). In order to get the basics done, the syslog WG decided to not dig down into these dirty details but rather lay out the foundation so that we can build on it in the future.

I still think this is a good compromise. It would be good if we could complement this foundation with some already existing technology. SNMP MIB encoding is not the right way to go, because it follows a different paradigm (syslog is still meant to be primarily clear text). One interesting alternative which I saw, and now evaluate, is the ipfix data modeling approach. Ideally, we could reuse it inside structured data, saving us the work to define some syslog-specific model of doing so.

The most important task, however, is to think about, and specify, some common “information building blocks”. With these, I mean standard properties, like source and destination ID, mail message id, bytes sent and received and so on. These, together with some standard syntaxes, can greatly relieve problems we face while consolidating and analyzing logs. Obviously, this is an area that I will be looking into in the near future as well.

It may be worth noting that I wrote a paper about syslog parsing back in 2004. It was, and has remained, work in progress. However, Adiscon did implement the concept in MonitorWare Console, which unfortunately never got wider exposure. Thinking about it, that work would benefit greatly from the availability of standardized syslog data models.